Introduction

As digital transformation accelerates, midstream oil and gas companies are leveraging the cloud to unlock their operational data. Cloud platforms provide advanced reporting and machine learning with unmatched scalability. However, integrating legacy systems with modern cloud solutions remains a challenge, especially when managing large volumes of diverse data across many assets.

One midstream oil and gas company struggled to move critical data from on-premises historian systems to the cloud in a usable format. The company needed to unify fragmented time series and contextual data, automate sensor mapping across thousands of assets, and scale to meet growing data demands. These challenges restricted data access, insights, and overall digital agility.

Challenge

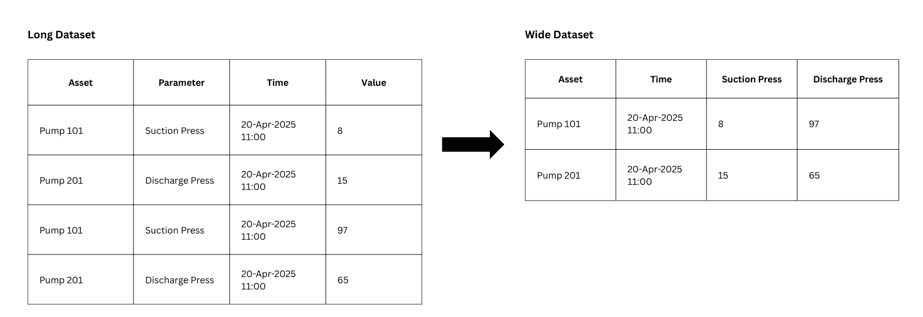

One key issue was information grouping. Historians return data as separate, disconnected sets: time series data and non-time series data (such as business unit, facility name, location, and equipment type). Cloud systems cannot easily interpret this format. To be usable, the data must be grouped into a table with a single timestamp per row, but historians do not store sensor data this way.

Once the information is formatted correctly, it must be transferred through a streaming platform such as Kafka. Kafka acts as a queue, storing incoming data before sending it to Databricks and similar systems for further processing.

Another challenge was the heavy reliance on manually specifying each sensor (attribute or tag), which added significant configuration and maintenance overhead. This became especially difficult at scale, with thousands of assets—such as meters, valves, chromatographs, compressors, and monitoring systems—and hundreds of thousands of sensors. Manually setting up or recreating each one slowed system deployment and reduced overall operational efficiency.

Additionally, concerns arose about whether the infrastructure could scale to handle the growing volume of data. As more assets were added to the system, maintaining and scaling performance became increasingly difficult. This often required setting up more systems and configurations, which was time-consuming and significantly increased maintenance effort.

Solution

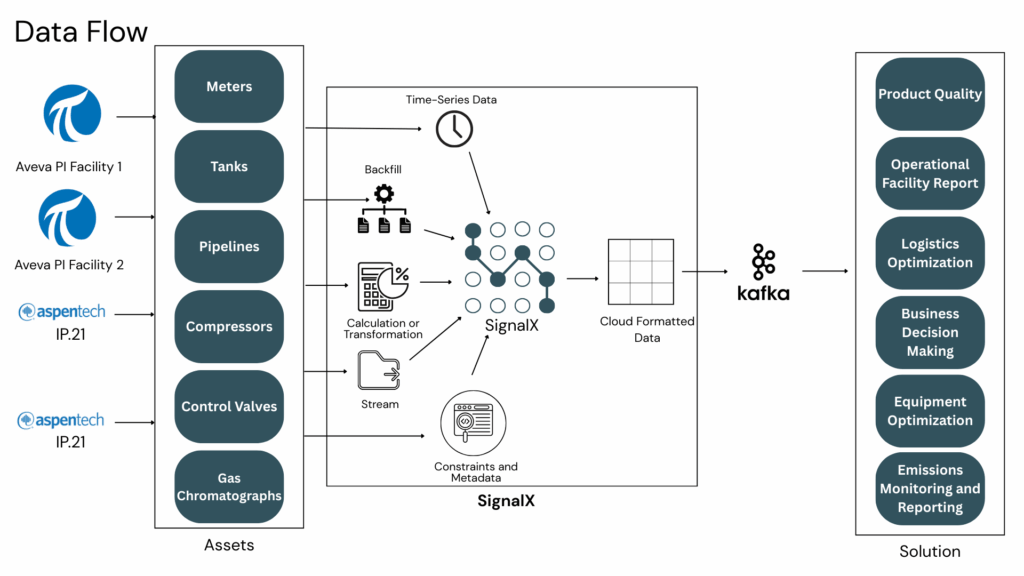

SignalX tackles key challenges in managing OT data. It removes the need to manually specify sensors (such as those on gas, liquid, and power meters), cutting configuration and maintenance time. With thousands of assets and hundreds of thousands of sensors, manual setup quickly becomes tedious. SignalX’s asset search detects new assets and removes outdated ones, so individual sensors never have to be specified by hand.
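The asset search behavior described above can be pictured as a comparison between the assets already configured and the assets found in the latest scan. The sketch below is only an illustration of that idea, not SignalX's actual implementation; the function name `diff_assets` and the asset names are hypothetical.

```python
def diff_assets(known, discovered):
    """Compare configured assets with a fresh scan.

    Returns (added, removed): assets that appeared in the scan but are
    not configured yet, and configured assets the scan no longer finds.
    Both lists are sorted for stable output.
    """
    known, discovered = set(known), set(discovered)
    added = sorted(discovered - known)
    removed = sorted(known - discovered)
    return added, removed


# Hypothetical asset names, for illustration only.
configured = ["Compressor-01", "Meter-07", "Valve-12"]
latest_scan = ["Compressor-01", "Meter-07", "Meter-08"]

added, removed = diff_assets(configured, latest_scan)
print(added)    # ['Meter-08']
print(removed)  # ['Valve-12']
```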

To address the complex challenges of integrating data from legacy historian systems into a modern cloud environment, the organization deployed SignalX, a no-code, point-and-click data integration platform purpose-built for operational data.

In this specific implementation, SignalX was used to connect to multiple on-premises historian systems, including:

- PI Systems

- Asset Framework (AF) Systems

- IP.21 Systems

SignalX automatically sourced both time-series and contextual data from these environments. Its advanced sensor discovery and mapping tools removed the need to manually specify attributes or tags for hundreds of thousands of sensors. Instead, it used metadata from AF and IP.21 structures to auto-map sensors to their corresponding assets (such as gas chromatographs, valves, compressors, and tanks), drastically cutting configuration time.
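To make the idea of metadata-driven mapping concrete, the sketch below groups sensors under their parent assets by splitting hierarchy paths. It assumes paths written in the `Element\Child|Attribute` style commonly used for AF; the server, database, and element names are invented, and the function `map_sensors_to_assets` is hypothetical rather than part of SignalX.

```python
from collections import defaultdict


def map_sensors_to_assets(attribute_paths):
    """Group attribute (sensor) names under their parent element path.

    Each path is assumed to look like 'element\\path|Attribute Name'.
    Paths without a '|' separator carry no attribute and are skipped.
    """
    assets = defaultdict(list)
    for path in attribute_paths:
        element_path, sep, attribute = path.rpartition("|")
        if not sep:
            continue
        assets[element_path].append(attribute)
    return dict(assets)


# Hypothetical paths, for illustration only.
paths = [
    r"\\AF01\Midstream\StationA\Compressor-01|Discharge Pressure",
    r"\\AF01\Midstream\StationA\Compressor-01|Suction Temperature",
    r"\\AF01\Midstream\StationA\Meter-07|Flow Rate",
]

for asset, sensors in map_sensors_to_assets(paths).items():
    print(asset, sensors)
```

Because the mapping is derived from the hierarchy itself, adding a sensor to an asset in the source system is enough for it to appear in the result; no per-sensor configuration is kept.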

Once the data was extracted, SignalX normalized it into a structured, cloud-readable format—grouping disparate time-series and non-time-series data into a single, row-based table structure with unified timestamps per row. This transformation allowed for seamless ingestion into downstream systems.
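The reshaping described above can be sketched in a few lines. The example assumes readings arrive as `(timestamp, asset, sensor, value)` tuples and that contextual data is a per-asset dictionary; the field names, values, and the `pivot_readings` function are hypothetical and shown only to illustrate the target table shape.

```python
from collections import defaultdict


def pivot_readings(readings, context):
    """Build one row per (timestamp, asset) from long-format readings.

    Each row carries the timestamp, the asset name, the asset's
    contextual fields, and one column per sensor seen at that timestamp.
    """
    grouped = defaultdict(dict)
    for ts, asset, sensor, value in readings:
        grouped[(ts, asset)][sensor] = value

    table = []
    for (ts, asset), values in sorted(grouped.items()):
        row = {"timestamp": ts, "asset": asset}
        row.update(context.get(asset, {}))  # contextual (non-time-series) data
        row.update(values)                  # sensor columns
        table.append(row)
    return table


# Hypothetical sample data, for illustration only.
readings = [
    ("2024-01-01T00:00:00Z", "Compressor-01", "discharge_pressure", 812.4),
    ("2024-01-01T00:00:00Z", "Compressor-01", "suction_temperature", 41.2),
    ("2024-01-01T00:01:00Z", "Compressor-01", "discharge_pressure", 813.0),
]
context = {
    "Compressor-01": {"facility": "Station A", "equipment_type": "compressor"},
}

for row in pivot_readings(readings, context):
    print(row)
```

In this sketch a sensor with no reading at a given timestamp is simply absent from that row; a real pipeline would need a policy for such gaps, such as nulls or interpolation.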

SignalX then streamed this cleaned and contextualized data in near real time via Kafka, a high-throughput distributed messaging platform, and the data was then ingested into Databricks.
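As an illustration of what a streamed record might look like, the sketch below turns one table row into a Kafka-ready key and value. The JSON encoding, the choice of the asset name as the message key, and the `to_kafka_message` function are assumptions made for this example; the source does not describe the actual wire format SignalX uses.

```python
import json


def to_kafka_message(row):
    """Serialize one table row into (key, value) byte strings.

    The asset name is used as the key (an assumption) and the whole row
    is encoded as JSON with sorted keys for reproducible output.
    """
    key = row["asset"].encode("utf-8")
    value = json.dumps(row, sort_keys=True).encode("utf-8")
    return key, value


# Hypothetical row, for illustration only.
row = {
    "timestamp": "2024-01-01T00:00:00Z",
    "asset": "Meter-07",
    "facility": "Station A",
    "flow_rate": 1250.5,
}

key, value = to_kafka_message(row)
print(key)
print(value.decode("utf-8"))
```

The resulting pair could then be handed to a Kafka client, for example `KafkaProducer.send(topic, key=key, value=value)` in the kafka-python library. Keying by asset is a design choice: with Kafka's default partitioning, messages that share a key go to the same partition, which keeps each asset's readings in order.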

Key features leveraged in this implementation included:

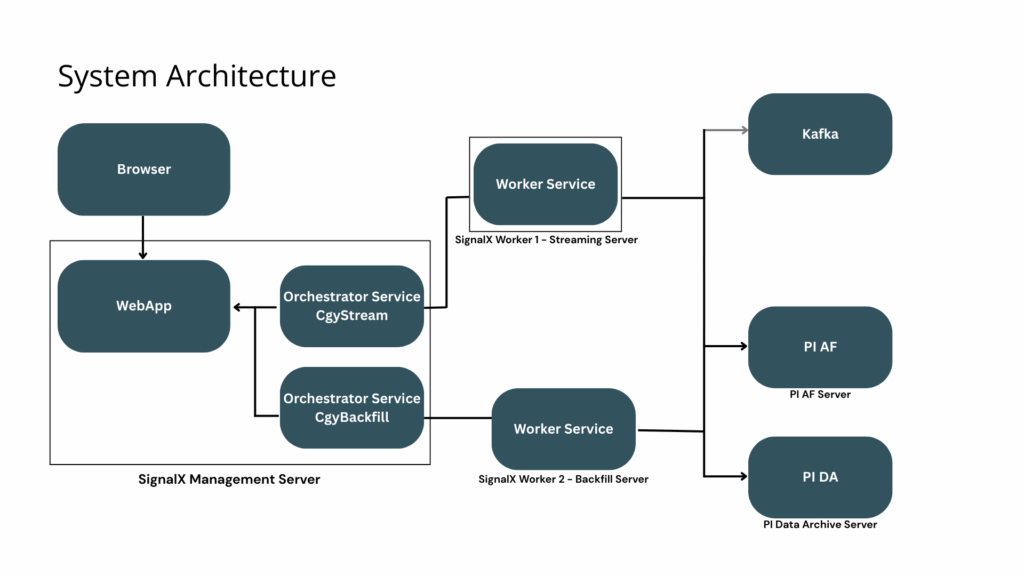

- Auto-scaling architecture: SignalX dynamically scales with the number of assets and data volume. As users connect more PI, AF, and IP.21 sources, SignalX automatically adds worker services to balance the load, requiring minimal manual effort.

- Zero-code configuration: OT personnel use the drag-and-drop interface to build and manage data pipelines without writing or maintaining custom scripts.

- Real-time streaming: SignalX streams data continuously through Kafka, ensuring timely delivery and eliminating delays from traditional batch ETL processes.

Even though it was not required in this deployment, SignalX also offers advanced capabilities for more complex network environments:

- Network proxying: SignalX can securely proxy data across segmented networks or firewall boundaries—ideal for air-gapped or isolated industrial environments.

- MQTT brokering: SignalX can function as a lightweight MQTT broker, allowing integration with IIoT devices and edge computing systems when needed.

Results – AVEVA PI and AF to Databricks

SignalX addressed the critical challenges of managing and using data from operational technology (OT) systems. It eliminated the heavy reliance on manually specifying sensors (such as those on gas meters, liquid meters, and power meters), reducing both configuration and maintenance time. With thousands of assets and hundreds of thousands of sensors, manually recreating each one had become increasingly tedious. SignalX’s asset search automatically detects new assets and removes decommissioned ones, so there is no need to specify individual sensors.

As scalability concerns grew, SignalX provided an efficient solution—scaling easily without complex setup. Its distributed architecture handles increased data loads and asset management by simply adding worker services. This blend of automation, efficiency, and scalability makes SignalX ideal for meeting growing data demands.

Contact Us

At MetaFactor, we understand the challenges of managing complex data and reporting systems. That’s why we recommend SignalX, our powerful data pipeline solution, to help you overcome these obstacles. SignalX is designed to streamline your data collection and reporting processes, automate asset detection, and significantly reduce maintenance costs.

If you’re looking for a highly scalable and flexible data integration solution that improves efficiency and delivers faster, more reliable data insights, SignalX is the solution you’ve been waiting for.